Facebook Staffs Up

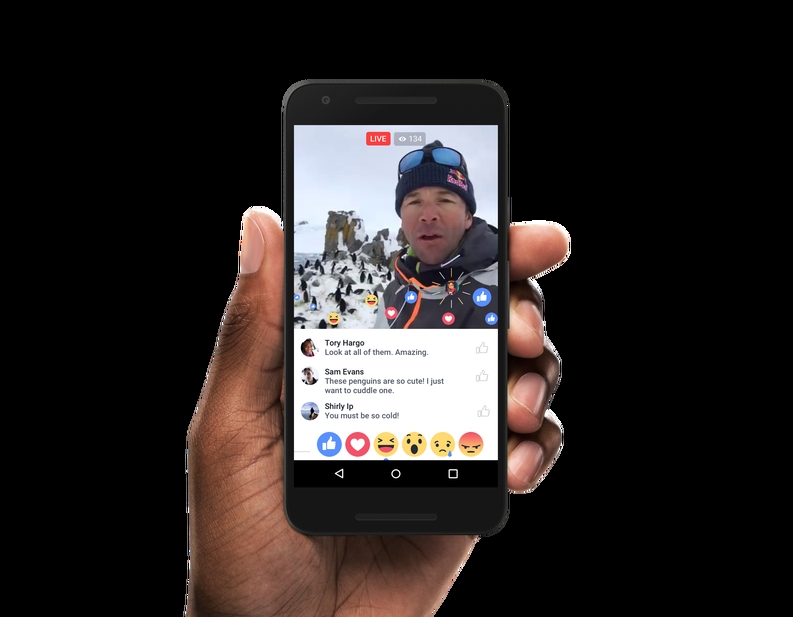

Facebook is taking further action in response to criticism about fake news, offensive posts, and violent videos. The company will hire 3,000 more people to monitor videos, hoping to avoid a repeat of the incident in which a man recorded himself murdering another man.

In a Facebook post, CEO Mark Zuckerberg wrote,

"Over the last few weeks, we've seen people hurting themselves and others on Facebook - either live or in video posted later. It's heartbreaking, and I've been reflecting on how we can do better for our community.

"If we're going to build a safe community, we need to respond quickly. We're working to make these videos easier to report so we can take the right action sooner - whether that's responding quickly when someone needs help or taking a post down.

"Over the next year, we'll be adding 3,000 people to our community operations team around the world -- on top of the 4,500 we have today -- to review the millions of reports we get every week, and improve the process for doing it quickly."

COO Sheryl Sandberg commented on the post: "Keeping people safe is our top priority. We won't stop until we get it right."

Some observers say the move reflects Facebook's disappointment with artificial intelligence (AI). The company's long-term goal is to develop AI that can reliably identify and remove inappropriate content, but that capability may still be some way off.

Discussion:

- Assess Zuckerberg's post. Who is the audience, and what are his communication objectives? What works well, and what could be improved?

- What else, if anything, can Facebook do to address these serious issues?